South African Journal of Childhood Education

On-line version ISSN 2223-7682; Print version ISSN 2223-7674

SAJCE vol.4 n.3 Johannesburg 2014

ARTICLES

The reading literacy profiles of first-year BEd foundation phase students

Carisma Nel*; Aninda Adam

North-West University

ABSTRACT

Reading is not simply an additional tool that students need at university; it constitutes the very process whereby learning occurs. Pre-service students are required to read prescribed course material which is predominantly in English. An overview of the reading literacy profiles of first-year BEd foundation phase students from diverse language backgrounds in a Faculty of Education Sciences indicates a diverse pattern of strengths and needs in terms of pseudo-word reading, spelling, oral reading fluency, vocabulary size and depth, and reading comprehension. An assessment and support rocket system for addressing the academic reading literacy needs of these students is discussed. This system seeks not only to facilitate success for all students, but also to fulfil requirements of quality student outcomes and timely completion and throughput rates.

Keywords: reading, profiles, pre-service teachers, assessment, academic support

Introduction

National and international assessment studies indicate that the reading achievement of South African learners is a major cause for concern (Howie, Van Staden, Tshele et al 2012; RSA DBE 2013). According to Green, Parker, Deacon and Hall (2011:112),

[t]he provision of sufficient numbers of excellent teachers for the nation's Foundation Phase classrooms [...] appears to be one of the key strategic levers that South Africa could employ in order to improve learning outcomes in the Foundation Phase and beyond.

There is an increasingly widespread perception within higher education that the reading literacy skills of students from both English-speaking backgrounds and non-English-speaking backgrounds do not allow them to meet the demands of their degree courses and, subsequently, those of the workplace (Murray 2010; Scott, Yeld & Hendry 2007). This view is confirmed by Bean (1996:133):

Many of today's students are poor readers, overwhelmed by the density of their college textbooks and baffled by the strangeness and complexity of primary sources and by their unfamiliarity with academic discourse.

Reading is not simply an additional tool that students need at university; it constitutes the very process whereby learning occurs. In a university setting where students come from diverse language backgrounds but are required to read course material in English at the same level of comprehension as a proficient L1 reader, the diverse student body needs specialised support in reading literacy. Institutions are finding that their systems are ill-equipped to respond to the needs of increasingly diverse students with increasingly diverse literacy and reading characteristics, backgrounds and degrees of 'reading readiness'. 'Reading' for a degree at university requires a systematic and comprehensive approach to supporting students; reading development should not be left to chance. Structures need to be put in place to ensure consistent and gradual development of academic reading skills for all students.

The purpose of this article is to report on the English reading literacy profiles (English being the language of most course reading material) of first-year Afrikaans-, Setswana- and English-speaking BEd foundation phase students in a faculty of education sciences, and to discuss a proposed initiative, a comprehensive assessment and support rocket system, for academic reading literacy support for diverse first-year students at university.

Reading literacy profiles of first-year pre-service foundation phase teachers

There is evidence that poor classroom instruction, particularly in the primary grades, is the core contributor to the high incidence of reading problems. Studies have attributed this to teachers' lack of a basic understanding of the concepts related to the English language that are necessary to teach reading skills (Bos, Mather, Dickson et al 2001; Moats 2000; Taylor 2008). Research also indicates that this lack of understanding may be attributed to inadequate preparation and support during university coursework at the pre-service level (Nel 2011; Van der Merwe & Nel 2012).

Scientific research shows that there are five essential components of reading (phonemic awareness, phonics, fluency, vocabulary development and reading comprehension) that children must be taught in order to learn to read (NICHD 2000). Despite the popularity of college and university students as research participants, studies of students' reading literacy have typically included measures of text comprehension only, not component skills (for example, Bray, Pascarella & Pierson 2004). Assessment profiles combine information from tests of several components to describe students' reading ability, including their strengths and needs, for instructional purposes and for student support (Chall 1994; Strucker 1997). The focus in this study is on a multi-component (word decoding, spelling, oral and silent reading fluency, vocabulary size and depth, and reading comprehension) reading analysis for the purpose of creating reading profiles of first-year pre-service foundation phase teachers for targeted academic reading support purposes. The theoretical framework utilised in this study focuses on understanding the interplay between university texts, tasks and readers within the sociocultural context of the university.

Word decoding accuracy and spelling

The relationship between word-level skills and comprehension is clear: students who either misread or skip unfamiliar words are at risk of failing to accurately comprehend a text. In a study of thirty community college students enrolled in developmental reading classes, most of whom were native English speakers, Dietrich (1994) found that the students' average word analysis skill (that is, the ability to decode unfamiliar words) was equivalent to approximately a grade 5 level. Bell and Perfetti (1994) found that undergraduates were poor at reading non-words quickly and accurately. Snow and Strucker (2000) suggested that poor decoding and slow reading might prevent students from meeting the everyday reading demands of postsecondary education, despite good performance on untimed tests of text comprehension. Spelling draws on the same base of phonological, orthographic and morphological knowledge as word recognition, but it is more difficult, because it requires production rather than recognition and because there are many alternate spellings of sounds (Ehri 2000).

Oral and silent reading fluency

The theoretical support for reading fluency as a prerequisite for comprehension can be found in automaticity theory (LaBerge & Samuels 1974; Rasinski & Samuels 2011) and verbal efficiency theory (Perfetti 1985). Both theories assume that attention and working memory, the two mental resources necessary for reading, are limited in capacity. After repeated exposure and with appropriate decoding skills, words and word parts are stored in memory as visual orthographic images. Such storage allows readers to bypass the decoding stage to quickly retrieve words from memory. This automaticity allows readers to focus on comprehension (ibid). In contrast, slow and laborious reading creates two major barriers to comprehension. Firstly, slower readers must focus their mental efforts on decoding, leaving limited cognitive resources for meaning-making (Apel & Swank 1999). Secondly, slow reading taxes short-term memory, as it is more difficult to retain the long and complicated sentences often found in university texts at slow reading rates than at rapid speeds (Strucker 2008). Whether oral or silent, reading fluency matters because it marks the successful orchestration of certain subskills (for example, decoding and word recognition) necessary for comprehension (Grabe 2009).

Vocabulary

According to Nagy (2003:1), "[v]ocabulary knowledge is fundamental to reading comprehension; one cannot understand text without knowing what most of the words mean". The present study focused on the dimensions of size (or breadth) and depth, which, according to Qian (2002:520), are two central aspects of word knowledge. Breadth of vocabulary refers to the number of words known, whereas depth of vocabulary (synonymy, polysemy and collocation) refers to the richness of knowledge about the words known (Stahl & Nagy 2006). Depth of knowledge focuses on the idea that, for useful higher frequency words, learners need to have more than just a superficial understanding of the meaning.

Goulden, Nation and Read (1990) determined that the vocabulary size of an average native English-speaking university student is about 17 000 word families (a word family being a base word together with its derived forms, for example, happy, unhappy, happiness), or as many as 40 000 different word types. Cervatiuc's (2007) study indicates that the average receptive vocabulary size of proficient university non-native English speakers ranges between 13 500 and 20 000 base words, which is comparable to the vocabulary size of university-educated native English speakers (around 17 000 word families). Laufer (1998) argues that it is not possible to guess the meaning of words unless one knows at least 95% of the neighbouring words. She maintains that students with fewer than 3000 word families (5000 lexical items) are poor readers, and holds that knowing more than 5000 word families (8000 lexical items) correlates with 70% reading comprehension. According to Henriksen (1995), a vocabulary of 10 000 words is necessary for academic studies, which is also the estimate obtained by Hazenberg and Hulstijn (1996) in a separate study on Dutch students.
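Laufer's 95% figure is a lexical coverage threshold: the share of running words in a text that the reader already knows. The sketch below shows how such coverage could be computed for a passage. It is illustrative only and assumes that a student's known vocabulary is available as a set of lowercase word forms. Real coverage studies count word families rather than surface forms, and this sketch does not attempt that lemmatisation.

```python
import re

# Laufer's threshold: roughly 95% of the surrounding words must be known
# before the meaning of an unfamiliar word can be guessed from context.
COVERAGE_THRESHOLD = 0.95


def lexical_coverage(text: str, known_words: set[str]) -> float:
    """Return the proportion of running word tokens in `text` found in `known_words`.

    Simplification: tokens are lowercase surface forms, not word families.
    """
    tokens = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    if not tokens:
        return 0.0
    known = sum(1 for token in tokens if token in known_words)
    return known / len(tokens)


def can_guess_from_context(text: str, known_words: set[str],
                           threshold: float = COVERAGE_THRESHOLD) -> bool:
    """True if coverage reaches the threshold for guessing unknown words."""
    return lexical_coverage(text, known_words) >= threshold


# A reader who knows 5 of these 6 tokens has about 83% coverage,
# well below the 95% needed for guessing from context.
sample = "The cat sat on the mat."
print(lexical_coverage(sample, {"the", "cat", "sat", "on"}))
```

Because academic texts contain many low-frequency words, even a modest gap in vocabulary size can pull coverage below this threshold.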

Reading comprehension

Advanced academic studies require that students read a sizable volume of expository texts in order to deepen and build upon their knowledge of course contents. Carrell and Grabe (2002:233) refer to reading abilities as "critical for academic learning" and to L2 reading as "the primary way that L2 students can learn on their own". Reading comprehension - the process of constructing meaning from a text (NICHD 2000:4-5) - is the end goal of reading and relies upon at least some skill or ability in each of the other component categories considered as requisites for reading comprehension. For comprehension to occur, words must be decoded and associated with their meanings in a reader's memory. Phrases and sentences must be processed fluently so that the meanings derived from one word, phrase or sentence are not lost before the next one is processed. Reading comprehension is a highly complex process which integrates multiple strategies used by the reader to create meaning from the text.

Method of research

Design

A one-shot cross-sectional survey design was used in this study. Cross-sectional studies are observational and descriptive rather than causal or relational: researchers record the information that is present in a population, but they do not manipulate variables. This type of research can describe characteristics that exist within a population, but it cannot determine cause-and-effect relationships between variables. Such designs are often used to suggest possible relationships or to gather preliminary data to support further research and experimentation (Creswell 2012).

Participants

A total of 343 first-year students (n=343) from the 2011 and 2012 intakes, enrolled on a full-time basis for a Baccalaureus in Education (BEd) degree, participated in this study. The students all chose to specialise in foundation phase teaching (Grade R to Grade 3). The age of the students ranged from 18 to 22 years. The participants included first language speakers of Afrikaans, English and Setswana. The participating students were divided into three groups based on their performance on the South African National Benchmark Test. The National Benchmark Tests (NBTs) were commissioned by Higher Education South Africa (HESA) to assess the academic readiness of first-year university students, as a supplement to secondary school reports on learning achieved in content-specific courses (National Benchmark Tests Project 2013). The students in this study completed the academic literacy component of the test. The proficient group, scoring between 65% and 100%, consisted of twenty-five (7.29%) students (nine Afrikaans-speaking, four Setswana-speaking, and twelve English-speaking students). The intermediate group, scoring between 42% and 64%, consisted of 129 (37.61%) students (eighty-seven Afrikaans-speaking, twenty Setswana-speaking, and twenty-two English-speaking students). The basic group, scoring between 0% and 41%, consisted of 189 (55.10%) students (150 Afrikaans-speaking, twenty-six Setswana-speaking, and thirteen English-speaking students).
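The grouping rule above can be expressed as a small function that maps an NBT academic literacy percentage to one of the three bands and then reports the share of students in each band. This is a sketch that assumes whole-number band edges as stated (65-100, 42-64, 0-41), so any score below 42 is treated as basic. The final lines recompute the reported group shares from the group sizes given in the text.

```python
from collections import Counter

BANDS = ("proficient", "intermediate", "basic")


def nbt_band(score: float) -> str:
    """Map an NBT academic literacy percentage to the band used in this study."""
    if not 0 <= score <= 100:
        raise ValueError("score must be a percentage between 0 and 100")
    if score >= 65:
        return "proficient"
    if score >= 42:
        return "intermediate"
    return "basic"  # 0-41%; assumed to cover any score below 42


def band_shares(scores: list[float]) -> dict[str, tuple[int, float]]:
    """Return (count, percentage of n) for each band, rounded to two decimals."""
    counts = Counter(nbt_band(score) for score in scores)
    n = len(scores)
    return {band: (counts[band], round(100 * counts[band] / n, 2)) for band in BANDS}


# Recompute the group shares from the reported group sizes.
reported = {"proficient": 25, "intermediate": 129, "basic": 189}
total = sum(reported.values())
shares = {band: round(100 * n / total, 2) for band, n in reported.items()}
print(total, shares)
# prints: 343 {'proficient': 7.29, 'intermediate': 37.61, 'basic': 55.1}
```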

Measures, procedure and analysis

All profiling assessments were conducted in the reading laboratory of the university (Academic Services). First-year students must attend the reading lab for a compulsory 'Computer-Assisted Reading Instruction' period. The researcher and five trained research assistants conducted the benchmark assessments during these periods. One assessment per period was completed. The assessments took a period of two weeks to complete. The following instruments were used in this study:

The Difficult Non-word Test (Jackson 2005) was used to determine word decoding efficiency in an untimed test of accuracy in reading twenty-four pronounceable non-words that were either polysyllabic (for example, ramifationic) or, if they had fewer than three syllables, usually contained consonant clusters (for example, chamgulp).

Students were asked to spell thirty low-frequency words with regular (for example, vindicate), morphophonemic (for example, dissimilar), or idiosyncratic (for example, manoeuvre) spelling patterns (Jackson 2005).

A 100-word domain-specific extract from a prescribed text in the BEd students' core curriculum was used to assess their oral reading fluency. The extract was analysed by means of the Gunning Fog Index (18.5) and the Flesch-Kincaid Grade Level (17.3) to ensure that it was at university level.
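The two readability indices and the words-correct-per-minute (wcpm) fluency measure reported later are simple formulas over counts. The sketch below implements the standard published formulas for the Gunning Fog Index and the Flesch-Kincaid Grade Level, plus a wcpm calculation. It assumes that word, sentence, syllable and complex-word counts (words of three or more syllables) have already been obtained. The study does not say which tool produced its counts. The wcpm function assumes the whole passage is timed, rather than scoring a fixed one-minute window.

```python
def gunning_fog(words: int, sentences: int, complex_words: int) -> float:
    """Gunning Fog Index: 0.4 * (average sentence length + % complex words)."""
    return 0.4 * (words / sentences + 100 * complex_words / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level from word, sentence and syllable counts."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def wcpm(words_attempted: int, errors: int, seconds: float) -> float:
    """Words correct per minute for a timed oral reading of a passage."""
    if seconds <= 0:
        raise ValueError("reading time must be positive")
    return (words_attempted - errors) * 60 / seconds


# Hypothetical counts for illustration only (not the study's extract):
print(gunning_fog(words=100, sentences=5, complex_words=20))     # about 16.0
print(flesch_kincaid_grade(words=100, sentences=5, syllables=170))  # about 12.27
# A student reading the 100-word extract in 45 seconds with 4 errors:
print(wcpm(100, 4, 45))  # 128.0
```

Both indices rise with longer sentences and longer words, which is why dense, domain-specific academic prose scores well above school-level texts.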

The Visagraph II eye movement recording system was used to determine the silent reading rate (wpm) with comprehension at which students read. The comprehension passages that were selected were at a first-year level.

The Vocabulary Size Test contains sample items from the 14 000 most frequently used word families in English. The test consists of 140 items (ten from each of fourteen 1000-word levels). It is designed to measure both first language and second language learners' written receptive vocabulary size, which is the vocabulary knowledge required for reading English. As each item in the test represents 100 word families, the students' scores on the test were multiplied by 100 to obtain their total vocabulary size estimates (Nguyen & Nation 2011).
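The scoring rule above is a single multiplication, shown here with a per-level breakdown added. The sketch assumes responses are stored as 140 booleans ordered by frequency level, so items 0-9 belong to the first 1000-word level, items 10-19 to the second, and so on. That ordering is an assumption about data layout, not part of the published test.

```python
LEVELS = 14             # fourteen 1000-word frequency levels
ITEMS_PER_LEVEL = 10    # ten items sampled from each level
FAMILIES_PER_ITEM = 100  # each item stands for 100 word families


def vst_size(responses: list[bool]) -> int:
    """Estimated receptive vocabulary size in word families."""
    expected = LEVELS * ITEMS_PER_LEVEL
    if len(responses) != expected:
        raise ValueError(f"expected {expected} item responses, got {len(responses)}")
    return sum(responses) * FAMILIES_PER_ITEM


def correct_per_level(responses: list[bool]) -> list[int]:
    """Number of correct items at each 1000-word level (assumes level order)."""
    return [sum(responses[i * ITEMS_PER_LEVEL:(i + 1) * ITEMS_PER_LEVEL])
            for i in range(LEVELS)]


# A student who answers the first 83 items correctly and the rest incorrectly:
answers = [True] * 83 + [False] * 57
print(vst_size(answers))           # 8300 word families
print(correct_per_level(answers))  # ten correct at levels 1-8, three at level 9
```

The per-level view can show where a student's knowledge tails off, which a single total cannot.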

The Depth of Vocabulary Knowledge (DVK) test was developed by Qian and Schedl (2004) based on the format of the word associates test (Read 1998). The DVK was designed to measure two aspects of depth of vocabulary knowledge: (1) word meaning, particularly polysemy and synonymy, and (2) word collocation. The DVK test used in this study contains forty items. Each item consists of one stimulus word, which is an adjective, and two boxes each containing four words. One to three of the four words in the box on the left can be synonymous with one aspect of the meaning, or the whole meaning, of the stimulus word, while one to three of the four words in the box on the right can collocate with the stimulus word. Each item always has four correct choices. In scoring, each word correctly chosen was awarded one point. The maximum possible score, therefore, was 160 for the forty items.
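The item structure and the one-point-per-correct-word rule can be modelled directly. In the sketch below, an item is valid only if each box holds four words and it has exactly four keys, with one to three of them in the left box. Scoring awards a point for each key chosen, with no penalty for wrong choices, as the description states. The example item uses the classic word-associates stimulus "sudden" for illustration only. It is not drawn from the DVK test itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DVKItem:
    stimulus: str
    left: tuple[str, ...]   # four candidates related in meaning (synonymy/polysemy)
    right: tuple[str, ...]  # four candidates that may collocate with the stimulus
    keys: frozenset[str]    # the four correct choices across both boxes

    def __post_init__(self) -> None:
        if len(self.left) != 4 or len(self.right) != 4:
            raise ValueError("each box must contain four words")
        if len(self.keys) != 4 or not self.keys <= set(self.left + self.right):
            raise ValueError("an item needs exactly four keys drawn from its boxes")
        # One to three keys on the left implies one to three on the right.
        if not 1 <= len(self.keys & set(self.left)) <= 3:
            raise ValueError("each box must contain one to three keys")


def score_item(item: DVKItem, chosen: list[str]) -> int:
    """One point per correctly chosen word; incorrect choices score nothing."""
    return len(item.keys & set(chosen))


def score_test(items: list[DVKItem], answers: list[list[str]]) -> int:
    """Total DVK score; the maximum is 4 points per item (160 for 40 items)."""
    if len(items) != len(answers):
        raise ValueError("one answer list is needed per item")
    return sum(score_item(item, chosen) for item, chosen in zip(items, answers))


# Illustrative item (hypothetical, not from the DVK test):
sudden = DVKItem(
    stimulus="sudden",
    left=("beautiful", "quick", "surprising", "thirsty"),
    right=("change", "doctor", "noise", "school"),
    keys=frozenset({"quick", "surprising", "change", "noise"}),
)
print(score_item(sudden, ["quick", "surprising", "change", "doctor"]))  # 3
```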

Reading comprehension was assessed using three complex texts adapted from chapters in the reading literacy domain: 'Important issues and concepts in reading assessment' (400 words); 'Best practices in fluency instruction' (350 words); and 'Best practices in teaching phonological awareness' (300 words). The texts were constructed in a maze format. The maze task differs from traditional comprehension questions in that it is based entirely on the text. After the first sentence, every seventh word in the passage is replaced with a choice of three words: the correct word and two distractors. Students choose the word from among the three options that best fits the rest of the passage.
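The maze construction rule can be sketched as follows. The first sentence is left intact, and every seventh word after it becomes a three-way choice. How the study selected its distractors is not described, so this sketch simply draws two words at random from a caller-supplied pool. Treat that as a placeholder, not the study's procedure. The sentence split is also naive and would break on abbreviations such as "e.g.".

```python
import random
import re


def make_maze(passage: str, distractor_pool: list[str], every: int = 7,
              seed: int = 0) -> tuple[str, list[str]]:
    """Build a maze passage and return it with the list of correct answers.

    Assumption: distractors are drawn at random from `distractor_pool`.
    """
    rng = random.Random(seed)
    match = re.search(r"[.!?]\s+", passage)  # end of the first sentence
    if match is None:
        return passage, []
    first, rest = passage[:match.end()], passage[match.end():]
    words = rest.split()
    answers = []
    for i in range(every - 1, len(words), every):  # 7th, 14th, ... word
        # Keep punctuation attached to the word outside the choice brackets.
        lead, core, trail = re.fullmatch(r"(\W*)(.*?)(\W*)", words[i]).groups()
        candidates = [w for w in distractor_pool if w.lower() != core.lower()]
        options = rng.sample(candidates, 2) + [core]
        rng.shuffle(options)
        words[i] = f"{lead}({'/'.join(options)}){trail}"
        answers.append(core)
    return first + " ".join(words), answers


text = ("Reading is complex. one two three four five six seven "
        "eight nine ten eleven twelve thirteen fourteen.")
maze, key = make_maze(text, ["apple", "river", "cloud"])
print(maze)
print(key)  # ['seven', 'fourteen']
```

In practice, distractors for the maze are usually chosen so that they are clearly wrong in context. Random selection from a pool is only a stand-in.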

Results and discussion

Table 1 outlines the reading literacy profiles of the respective groups (proficient, intermediate and basic) of first-year BEd students. In the decoding of pseudo-words, the proficient group scored 60%, the intermediate group 35%, and the basic group 21%. None of the groups attained the guideline of 80% set by the faculty. Although pseudo-words are not real words, decoding them engages the orthographic and orthographic-phonemic processes that are central to word identification. The spelling results were 58%, 36% and 19% for the proficient, intermediate and basic groups, respectively, and again none of the groups achieved the 80% guideline specified by the faculty. Performance on the spelling test was moderate to good for words with regular spelling; however, accuracy for morphophonemic and idiosyncratic words ranged from 0% to 44%. The results therefore indicated that students with low decoding accuracy also tended to have low spelling accuracy. The oral reading fluency results showed variation among the three groups: the proficient group read 138.45 words correct per minute (wcpm), the intermediate group 101.36 wcpm, and the basic group 85.23 wcpm. The proficient group was close to achieving the target set by the faculty.
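A profile is most useful when it shows the size of each gap, not only whether a guideline was met. The sketch below uses the group means reported above for the two components that have a stated 80% faculty guideline. It computes each group's shortfall. The other components' guidelines appear only in Table 1 and are not reproduced here.

```python
GUIDELINE = 80.0  # faculty guideline (%) for pseudo-word decoding and spelling

# Group means reported in the text.
reported = {
    "proficient":   {"pseudo-word decoding": 60, "spelling": 58},
    "intermediate": {"pseudo-word decoding": 35, "spelling": 36},
    "basic":        {"pseudo-word decoding": 21, "spelling": 19},
}


def shortfalls(profile: dict[str, float], guideline: float = GUIDELINE) -> dict[str, float]:
    """Percentage points by which each below-guideline component falls short."""
    return {skill: guideline - score
            for skill, score in profile.items() if score < guideline}


for group, profile in reported.items():
    print(group, shortfalls(profile))
# proficient {'pseudo-word decoding': 20.0, 'spelling': 22.0}
# intermediate {'pseudo-word decoding': 45.0, 'spelling': 44.0}
# basic {'pseudo-word decoding': 59.0, 'spelling': 61.0}
```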

The vocabulary size results showed that the proficient group achieved an average of 8284.61 word families, the intermediate group 6410.51, and the basic group 5991.21. Only the proficient group was close to the recommended 9 000 word families required for university study. With regard to vocabulary depth, the proficient group obtained an average score of 101.54 out of a possible 160, while the basic group achieved an average of only 64.40. The results indicate that the students face serious vocabulary challenges, and a limited vocabulary may expose these first-year university students to further difficulties. Academic success is closely related to the ability to read, and reading in turn depends on vocabulary: to obtain meaning from what they read, students need to have a large number of words in their memory. Students who do not have large vocabularies often struggle to achieve comprehension (Hart & Risley 2003) and, as a result, tend to avoid reading. In personal communication with the authors, the students indicated that they get their more proficient peers to do the course readings for them and pay them for summaries. Besides affecting reading ability, limited vocabulary knowledge may also influence writing quality, which is important to success at university. According to Linnarud (1986), vocabulary size is the single largest factor in writing quality. Similarly, Laufer and Nation (1995) found that students with larger vocabularies use fewer high-frequency words and more low-frequency words than students with smaller vocabularies. The results of this study indicate that these students' deficiency in word knowledge is not confined to size alone: the quality or depth of their word knowledge also suffers and lags even further behind their vocabulary size.

With regard to silent reading rate with comprehension, the proficient group read an average of 285.32 wpm with approximately 84% comprehension; the intermediate group read 189.45 wpm with 60% comprehension; and the basic group read 135.56 wpm with 40% comprehension. Only the proficient group performed according to the faculty guidelines. Given the typical university student's workload, this choppy and hesitant reading poses a practical barrier. Simpson and Nist (2000) report that 85% of college learning requires careful reading. Extensive reading is also needed, as students must often read and understand 200 to 250 pages per week to meet the sophisticated reading demands of writing assignments and research papers, and to prepare for tests at university (Burrell, Tao, Simpson & Mendez-Berrueta 1997). This workload is substantial even for students reading at 263 words per minute (Carver 1990), but may prove overwhelming for students with particularly slow reading rates. On the domain-specific reading comprehension texts, both the intermediate and basic groups read with below 50% comprehension.
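The workload argument can be made concrete with a worked calculation of weekly reading time at each group's silent reading rate. The figure of 400 words per page is an assumption chosen for illustration. The sources cited give pages, not words. The estimate also ignores rereading, which is likely to be considerable at 40% to 60% comprehension.

```python
WORDS_PER_PAGE = 400  # assumption for illustration; not reported in the study


def weekly_reading_hours(pages: int, wpm: float,
                         words_per_page: int = WORDS_PER_PAGE) -> float:
    """Hours of uninterrupted reading needed for `pages` at `wpm`."""
    return pages * words_per_page / wpm / 60


rates = {
    "typical (Carver 1990)": 263,
    "proficient": 285.32,
    "intermediate": 189.45,
    "basic": 135.56,
}
for label, wpm in rates.items():
    low, high = weekly_reading_hours(200, wpm), weekly_reading_hours(250, wpm)
    print(f"{label:>22}: {low:.1f}-{high:.1f} hours per week")
# basic group: roughly 9.8-12.3 hours per week, more than twice the proficient group
```

Under these assumptions, a basic-group student needs more than twice the proficient group's reading time for the same pages, while understanding less than half as much of what is read.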

Overall, the results indicated that the first-year BEd students are diverse and that their reading literacy profiles are very uneven across the various components of reading assessed in this study. Some of the skills were on a par with the Faculty of Education Sciences guidelines and requirements, but many important skills were not. The results indicated that all students, even those in the proficient group, need reading support in order to complete their studies successfully and master the skills they will one day have to teach to children in the foundation phase. The diverse profiles represent a significant challenge for the academic reading literacy support that should be provided at university level.

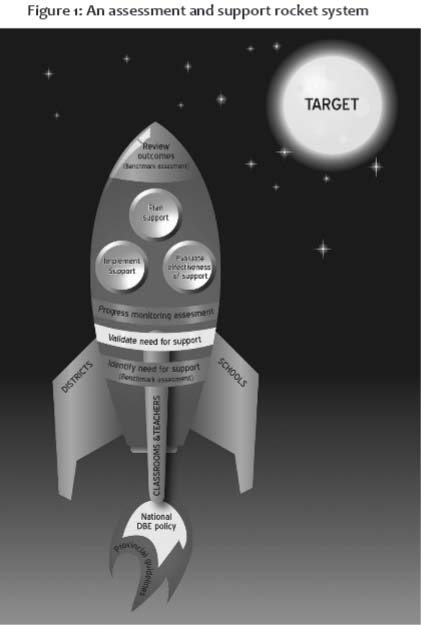

A comprehensive assessment and support rocket system

The assessment and support rocket system envisaged at higher education level is based on a rocket metaphor derived from a document entitled Reading is Rocket Science (Moats 1999). The aim of the rocket system is to ensure that all students achieve 'lift-off' and hit the identified targets, throughout their study period, on the way to reading success. The rocket system is designed to provide scientific, research-based interventions that lead to successful reading at university. The focus of the rocket system should be seen as developmental and preventative, and not as remedial. The rationale for a developmental focus is based on Alexander's (2005) lifespan orientation toward reading. This perspective looks at reading as "a long-term developmental process", at the end of which

[...] the proficient adult reader can read a variety of materials with ease and interest, can read for varying purposes, and can read with comprehension even when the material is neither easy to understand nor intrinsically interesting (RAND Reading Study Group 2002:xiii).

The rocket system's basic philosophy is based on the recognition that all students entering university need assistance in developing the necessary and appropriate reading skills for both the higher education academic context in general and, more importantly, the domain-specific context (for example, language/literacy teaching in the foundation phase) (Alexander 2005). The proposed rocket system aims to transform current teacher training within the foundation phase by promoting synergy between the stakeholders responsible for meeting the students' reading literacy support needs and their discipline-specific training at university, as well as partners in the workplace. The partnerships include those between the university (as teacher training institution) and the Department of Basic Education (DBE) (represented by the provinces, districts, schools and teachers in the classrooms). Within the university, where quality training is the norm, various stakeholders should collaborate to ensure more comprehensive and effective support to pre-service students, so that they may complete their studies timeously. These stakeholders include reading literacy specialists within the Reading Initiative for Empowerment (RIFE) research group of the Faculty of Education Sciences, who are responsible for profiling the students and providing evidence-based reading literacy interventions; the lecturers within the Faculty of Education Sciences, who are responsible for providing quality training in the domain of language/literacy for the foundation phase; and teaching-learning specialists in the academic support services division of the university, who are responsible for providing assistance related to computer-based reading programmes and coordination of the supplemental instruction (SI) component.

The flame of the rocket system is provided by national policy documents (for example, the Curriculum and Assessment Policy Statement, Foundation Phase (Grades R to 3)) and provincial guidelines for schools (for example, how to use Annual National Assessment (ANA) results to improve learner performance). During their pre-service training, students should be made aware of important national and provincial documentation and how to respond to these documents; they need to acquire knowledge related both to learning in practice (for example, work-integrated learning (WiL)) and to learning from practice (for example, the study of practice) (see Figure 1).

To ensure that the rocket is stable before lift-off, three partners play a crucial scaffolding role: the district, the school, and the teachers in the classroom. The scaffolding partners should be involved in the pre-service as well as in-service training of student teachers in order to ensure quality foundation phase teacher preparation. Districts (that is, the partners who will employ the pre-service teachers) can be involved by sending subject advisors to visit the pre-service students at the schools during their WiL placement periods in order to provide subject-specific support as well as support related to general 'wellness' issues. Management team members at schools (that is, the future workplaces of the pre-service teachers) can mentor pre-service teachers on generic aspects of teaching. Teachers in the classroom (where the pre-service teachers do their WiL training) can provide subject-specific mentoring. All these partners must work in collaboration with the university (that is, the partner responsible for providing quality foundation phase teacher training) to create an environment where students (that is, prospective teachers) are supported not only in enhancing their own reading literacy skills, but also in gaining evidence-based, discipline-specific knowledge based on knowledge and practice standards for teachers specialising in language/literacy in the foundation phase (see Figure 1).

The main compartments of the rocket system are the responsibility of the university, with significant inputs from the scaffolding partners (see Figure 1), and are based on the initial application of the problem-solving model to early literacy skills (Kaminski & Good 1998). The general questions addressed by a problem-solving model include (Tilly 2008:24):

• What is the problem?

• Why is it happening?

• What should be done about it?

• Did it work?

The first step is to identify the need for support. To identify this need, the reading specialists and the teaching-learning specialists administer benchmark assessments, including the National Benchmark Test, to all foundation phase students at the beginning of the year. Based on their profiles, students are flagged as either red (at risk), yellow (moderate risk), or green (no risk). The Faculty of Education Sciences guidelines are used as benchmark guidelines (see Table 1). The profiling is done by the reading specialists within the faculty, in collaboration with the teaching-learning specialists at academic services (reading laboratory). The results are then communicated to the lecturers responsible for teaching the domain-specific modules in the BEd foundation phase programme. Benchmark assessment represents a prevention-oriented strategy that identifies possible areas of concern before a substantial, pervasive problem is evidenced. The benchmark assessment thus provides data that are used to identify individual students who may need additional reading literacy support to achieve the benchmark goals as well as course assessment requirements (for example, the language/literacy module in the BEd foundation phase programme). It also provides information on the performance of all students in a particular foundation phase year group with respect to benchmark goals.
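The red/yellow/green flagging step can be sketched as a rule that compares each component score with its benchmark guideline. The article does not spell out where the yellow band ends and red begins, so the `red_ratio` cut-off below is hypothetical. In this sketch, a student is green if every component meets its guideline, red if any component falls below 75% of its guideline, and yellow otherwise. Only the two 80% guidelines stated in the text are used in the example. The remaining guidelines are in Table 1.

```python
def flag_student(scores: dict[str, float], guidelines: dict[str, float],
                 red_ratio: float = 0.75) -> str:
    """Return 'green', 'yellow' or 'red' for one student's profile.

    `red_ratio` is a hypothetical cut-off, not taken from the faculty guidelines.
    """
    missing = set(guidelines) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    ratios = [scores[c] / guidelines[c] for c in guidelines]
    if all(r >= 1.0 for r in ratios):
        return "green"   # meets every guideline: no risk
    if any(r < red_ratio for r in ratios):
        return "red"     # far below at least one guideline: at risk
    return "yellow"      # below a guideline, but not far below: moderate risk


GUIDELINES = {"pseudo-word decoding": 80.0, "spelling": 80.0}
print(flag_student({"pseudo-word decoding": 85, "spelling": 90}, GUIDELINES))  # green
print(flag_student({"pseudo-word decoding": 70, "spelling": 82}, GUIDELINES))  # yellow
print(flag_student({"pseudo-word decoding": 50, "spelling": 82}, GUIDELINES))  # red
```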

At the systems level (that is, year-group level), the following question can be asked: Is the support system generally effective in assisting most students at first-year level to reach their reading literacy goals as well as the course/module outcomes? This is determined by the percentage of students who reach benchmark goals. If a large proportion of students score below the benchmark, system-level support may be necessary.
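The system-level question reduces to a proportion. In the sketch below, "reaching the benchmark" is taken to mean a green flag. The 80% cut-off for deciding that system-level support is needed is an assumption borrowed from common response-to-intervention practice, not a figure from this article.

```python
def proportion_meeting(flags: list[str]) -> float:
    """Share of a year group flagged green (treated here as reaching benchmark)."""
    if not flags:
        raise ValueError("no students in the year group")
    return sum(flag == "green" for flag in flags) / len(flags)


def needs_system_support(flags: list[str], min_share: float = 0.8) -> bool:
    """True if too few students reach benchmark; `min_share` is an assumption."""
    return proportion_meeting(flags) < min_share


year_group = ["green"] * 7 + ["yellow"] * 1 + ["red"] * 2
print(proportion_meeting(year_group))    # 0.7
print(needs_system_support(year_group))  # True
```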

At the individual student level, the following question can be asked: Is the student likely to need more or different instruction to reach reading literacy goals and/or course outcomes? This is determined by each student's score relative to benchmark goals and course assessment requirements.

The next step is to validate the need for support. The aim is to rule out alternative reasons for a student's poor performance in order to be reasonably confident that he or she needs additional instructional support. It is important to examine a pattern of performance rather than a single score before making important, individual decisions about instructional support. Students' progress is monitored on a biweekly basis. The reading specialists are responsible for collecting data related to assignment marks, class tests, projects, and so forth, from lecturers. After eight weeks, all students are flagged again (red, yellow, green) based on the initial benchmark assessments as well as formative and summative assessments carried out during the intervening eight-week period, in order to ensure that all students needing specialised support are accurately identified.
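The principle of judging a pattern of performance rather than a single score can be illustrated with a re-flagging rule over all the percentage scores collected during the eight weeks (benchmark results, assignments, class tests and projects). The 50% cut-off and the majority rule below are hypothetical. The article does not state how the scores are combined. Here, a student with no scores below the cut-off is green, a student with most scores below it is red, and anyone in between is yellow.

```python
def reflag(scores: list[float], cut: float = 50.0) -> str:
    """Re-flag a student from a pattern of scores (hypothetical rule and cut-off)."""
    if not scores:
        raise ValueError("need at least one score")
    below = sum(score < cut for score in scores)
    if below == 0:
        return "green"
    if below * 2 > len(scores):  # most scores fall below the cut-off
        return "red"
    return "yellow"


print(reflag([60, 72, 55]))  # green
print(reflag([45, 62, 70]))  # yellow: a single low score is not decisive
print(reflag([40, 45, 66]))  # red: a consistent pattern of low scores
```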

The next step is to plan and implement support. The aim is to provide supplemental targeted support to those students identified, in the previous steps, as not making adequate progress. Students identified as needing support are assisted by the reading literacy specialists, who provide small-group instruction grounded in scientifically based reading research (SBRR) to teach critical reading components (as identified by Kruidenier (2002), the National Institute of Child Health and Human Development (NICHD 2000), and the RAND Reading Study Group (2002)). Components taught include strategic reading, fluency, reading comprehension, vocabulary, text and language structures within expository texts, and reading strategies. Instruction is direct and explicit. The reading specialists use the prescribed material from the lecturers' modules as their instructional content. When students are referred for supplemental instruction and support, the teaching-learning specialists in the academic services division implement the supplemental instruction (SI) component of the support (that is, they identify senior students to act as peer facilitators in the BEd foundation phase modules with which at-risk students are experiencing problems), as well as continuous computer-based reading support. Biweekly meetings between the reading specialists, lecturers, teaching-learning specialists, and SI student facilitators ensure collaboration and engagement. If students are referred for supplemental instruction and support, lecturers are responsible for providing one additional period of small-group tutorials to the identified students. Each subject group (for example, language, mathematics, and so on) has a chairperson who is responsible for its daily running.
The subject group chairpersons are also involved, to ensure that the students receive targeted support in modules where they experience reading-related problems. Subject advisors from the North West Department of Education (district-specific) and the head of the foundation phase at the partnership school are also closely involved with the identified students to provide more specialised support (for example, problems with language lesson planning). The reading specialists are also responsible for working with lecturers to assist them with the reading focus in their modules. Bean (1996:133) believes that lecturers need to assume responsibility for getting students to "read for the course". This includes making certain that assigned reading is course-related and that it teaches students the discipline-specific values and strategies that facilitate disciplinary learning. Developing students' ability to read higher order disciplinary-linked texts is a moot endeavour if students do not read course assignments.

The next step is to evaluate and modify the support as needed for individual students. The goal is to find a match between the support provided and the student's needs. The support is modified until the student's progress is sufficient to achieve both the benchmark goals and course outcomes. A key premise at this stage is that a good plan is a powerful starting place for lecturing, but an individual student's response to a particular curriculum or strategy, even a research-based curriculum or strategy, is unpredictable. No matter how good the plan, programme or strategy, if it is not supporting the student's progress toward the target goal or outcome, it needs to be modified.

The first step in evaluating support is to establish a data collection plan for progress monitoring. In general, progress monitoring every two weeks is recommended for students who are receiving substantial, intensive instructional support. For students who need less support, less frequent monitoring, such as once a month, may be sufficient (see Hall 2012).

The next step is to establish decision rules for evaluating the data. A goal-oriented rule is recommended for evaluating a student's response to intervention, as it is straightforward for lecturers to understand and use. Decisions about a student's progress are based on comparing the student's benchmark scores, plotted on a graph, with the aim line, which represents the expected rate of progress. It is suggested that reading specialists, teaching-learning specialists and lecturers consider modifying instructional support when a student's performance falls below the aim line for three consecutive data points (Kaminski & Good 2013) (see Figure 2). As when validating a student's need for support, a pattern of performance is considered before decisions are made about individual students.
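The three-consecutive-points rule attributed above to Kaminski and Good (2013) can be expressed as a short check. This sketch makes the following assumptions, none of which come from the article:

- The aim line is a straight line from a student's baseline score to the goal score at the end of the monitoring period.
- The rule is applied to the most recent data points each time new data arrive.
- The baseline, goal and period length in the example are invented.
- `aim_line` and `needs_modification` are hypothetical names.

```python
def aim_line(baseline: float, goal: float, total_weeks: float, week: float) -> float:
    """Expected score at `week` on a straight line from the baseline score
    (week 0) to the goal score (week `total_weeks`)."""
    return baseline + (goal - baseline) * week / total_weeks


def needs_modification(observations: list[tuple[float, float]], baseline: float,
                       goal: float, total_weeks: float, run_length: int = 3) -> bool:
    """Return True when the most recent `run_length` data points all fall below
    the aim line. `observations` holds (week, score) pairs in time order."""
    if len(observations) < run_length:
        return False  # too few data points to show a pattern yet
    recent = observations[-run_length:]
    return all(score < aim_line(baseline, goal, total_weeks, week)
               for week, score in recent)


# Invented biweekly data: baseline 40, goal 70 after a 16-week period.
data = [(2, 44), (4, 45), (6, 46), (8, 47)]
print(needs_modification(data[:3], 40, 70, 16))  # → False (week 2 is above the aim line)
print(needs_modification(data, 40, 70, 16))      # → True (weeks 4, 6 and 8 are below it)
```

The check only signals that modification should be considered. The decision itself still rests on the wider pattern of performance.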

In addition to providing a more reliable indicator of a student's skill level, ongoing progress monitoring places an upper limit on how long an ineffective intervention is allowed to continue. For example, if data are gathered biweekly and a student is 'flatlining', lecturers and reading specialists will have useful information for deciding how to modify instructional support within two weeks, a relatively short period. It is, however, important to note that modifying support does not necessarily mean discontinuing an intervention. Especially if specialists are implementing an evidence-based programme, important alterable instructional support variables ought to be considered before an entirely different approach is adopted. These modifications may include, but are not limited to, assessing fidelity of implementation, decreasing group size, spending more time on the content, or providing additional explicit instruction and opportunities for practice.

The last step is to review outcomes. The aim is to review the structure of support that the faculty has in place to achieve outcomes at both the individual student level and the systems level (that is, the entire year group). For individual students, the lecturer and the specialists must decide whether the student has achieved the benchmarks and course outcomes and therefore no longer requires additional instructional support. At this stage, the lecturers and specialists again review the benchmark assessment and course data, using the same procedures as those used to identify a need for instructional support. The following organising questions can be used for reviewing outcomes at the systems level:

• What proportion of students is meeting benchmark goals?

• How effective are our core curriculum and teaching in supporting students who are on track to achieve benchmark goals?

• How effective is our system of supplemental programmes and teaching in assisting students who need additional support to achieve benchmark goals and course outcomes?

• How do the reading literacy skills and course outcomes displayed by students at a particular year level compare to those displayed by students in the previous year?
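The first and last systems-level questions reduce to simple proportions that can be computed from each year group's benchmark scores. This minimal sketch assumes one benchmark score per student on a common scale and a hypothetical benchmark value. The scores are invented for illustration, and the function names are hypothetical.

```python
def proportion_meeting_benchmark(scores: list[float], benchmark: float) -> float:
    """Share of a year group scoring at or above the benchmark."""
    if not scores:
        raise ValueError("no scores supplied")
    return sum(score >= benchmark for score in scores) / len(scores)


def change_from_previous_year(current: list[float], previous: list[float],
                              benchmark: float) -> float:
    """Change in the proportion meeting the benchmark compared with the previous
    year (positive values mean a larger share of the current year group met it)."""
    return (proportion_meeting_benchmark(current, benchmark)
            - proportion_meeting_benchmark(previous, benchmark))


# Invented scores for two year groups, with a hypothetical benchmark of 70.
current_year = [55, 72, 80, 64, 90]
previous_year = [50, 71, 60, 65]
print(proportion_meeting_benchmark(current_year, 70))   # → 0.6
print(proportion_meeting_benchmark(previous_year, 70))  # → 0.25
print(round(change_from_previous_year(current_year, previous_year, 70), 2))  # → 0.35
```

The same function can be applied to the subset of students who received supplemental support. This gives one rough indicator for the third question. On its own, however, it cannot separate the effect of the support from other influences.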

Conclusion

The most significant implications of this study relate to quality instruction and support. Given the profile of reading strengths and needs of the foundation phase pre-service teachers, it is important that teacher educators be prepared to teach these students reading skills and strategies in each of the reading component areas. Because many students will need instruction in all reading components, but at different levels of intensity, universities must develop ways of providing an array of instructional alternatives that address students' varying needs. It is essential that lecturers have a clear understanding of the specific reading skill profile and instructional needs of this population, and of how these needs change over time and across contexts. The assessment and support rocket system emphasises the importance of reading within the higher education context. It acknowledges that, because reading is a developmental skill, all students need support with it. The rocket system focuses on helping students succeed in higher education when they have to do most of their core curriculum academic reading in their second, and sometimes third, language. Each component of the rocket system provides a different level of support based on students' reading literacy needs. That is, it focuses not only on reading comprehension but on all the components identified by scientifically-based reading research, and support is monitored through students' outcomes or data. Too often, assessment scores simply sit on the shelf. Merely assessing, without using the data to inform instruction and support, is a waste of time. Lecturers need to know how to use the data, including how to identify essential skills and how to provide support in the identified skill areas. In addition, the rocket system addresses the much-criticised lack of collaboration between reading literacy specialists, domain-specific lecturers and academic support services at universities. It also provides for an additional stakeholder partnership between three parties: the faculties responsible for training teachers, the provincial departments of education responsible for employing them, and the schools that assist during work-integrated learning periods and ultimately become teachers' workplaces. The assessment and support rocket system therefore encourages sustained rather than ad hoc collaboration. Reading literacy teaching is framed as central to the way in which academic domains structure their knowledge bases. It is expected that the comprehensive assessment and support rocket system will ensure that students not only acquire the reading skills necessary to complete their studies in the required time, but also become more accomplished discipline-specific readers.

References

Alexander, P.A. 2005. The path to competence: A lifespan developmental perspective on reading. White paper commissioned by the National Reading Conference, Oak Creek, Wisconsin.

Apel, K. & Swank, L.K. 1999. Second chances: Improving decoding skills in the older student. Language, Speech, and Hearing Services in Schools, 30:231-242.

Bean, J.C. 1996. Engaging ideas: The professor's guide to integrating writing, critical thinking, and active learning in the classroom. San Francisco, CA: Jossey-Bass.

Bell, L.C. & Perfetti, C.A. 1994. Reading skill: Some adult comparisons. Journal of Educational Psychology, 86:244-255.

Bos, C., Mather, N., Dickson, S., Podhajski, B. & Chard, D. 2001. Perceptions and knowledge of pre-service and in-service educators about early reading instruction. Annals of Dyslexia, 51:97-120.

Bray, G.B., Pascarella, E.T. & Pierson, C.T. 2004. Postsecondary education and some dimensions of literacy development: An exploration of longitudinal evidence. Reading Research Quarterly, 39:306-330.

Burrell, K.I., Tao, L., Simpson, M.L. & Mendez-Berrueta, H. 1997. How do we know what we are preparing students for? A reality check of one university's academic literacy demands. Research and Teaching in Developmental Education, 13:15-70.

Carrell, P.L. & Grabe, W. 2002. Reading. In N. Schmitt (Ed.), An introduction to applied linguistics. London: Edward Arnold. 233-250.

Carver, R.P. 1990. Reading rate: A review of research and theory. San Diego, CA: Harcourt Brace Jovanovich.

Cervatiuc, A. 2007. Highly proficient adult non-native English speakers' perceptions of their second language vocabulary learning process. Unpublished PhD thesis. Calgary: University of Calgary.

Chall, J. 1994. Patterns of adult reading. Learning Disabilities, 5(1):29-33.

Creswell, J.W. 2012. Educational research: Planning, conducting, and evaluating quantitative and qualitative research. New York: Pearson.

Dietrich, J.A. 1994. The effects of auditory perception training on the reading ability of adult poor readers. Paper presented at the Annual Meeting of the American Educational Research Association, New Orleans, Louisiana, April 1994.

Ehri, L. 2000. Learning to read and learning to spell: Two sides of a coin. Topics in Language Disorders, 20(3):19-49.

Goulden, R., Nation, P. & Read, J. 1990. How large can a receptive vocabulary be? Applied Linguistics, 11(4):341-363.

Grabe, W. 2009. Reading in a second language: Moving from theory to practice. New York: Cambridge University Press.

Green, W., Parker, D., Deacon, R. & Hall, G. 2011. Foundation phase teacher provision by public higher education institutions in South Africa. South African Journal of Childhood Education, 1(1):109-122.

Hall, S. 2012. I've DIBEL'd, Now What? Boston, MA: Sopris West Educational Services.

Hart, B. & Risley, T.R. 2003. The early catastrophe: The 30 million word gap by age 3. American Educator, 27(1):4-9.

Hazenberg, S. & Hulstijn, J.H. 1996. Defining a minimal receptive second language vocabulary for non-native university students: An empirical investigation. Applied Linguistics, 17(2):145-163.

Henriksen, B. 1995. What does knowing a word mean? Understanding words and mastering words. Sprogforum, 3:12-18.

Howie, S., Van Staden, S., Tshele, M., Dowse, C. & Zimmerman, L. 2012. PIRLS 2011. South African children's reading literacy achievement. Pretoria: University of Pretoria Centre for Evaluation and Assessment.

Jackson, N.E. 2005. Are university students' component reading skills related to their text comprehension and academic achievement? Learning and Individual Differences, 15:113-139.

Kaminski, R.A. & Good, R.H. 1998. Assessing early literacy skills in a problem-solving model: Dynamic indicators of basic early literacy skills. In M.R. Shinn (Ed.), Advanced applications of curriculum-based measurement. New York: Guilford Press. 113-142.

Kaminski, R.A. & Good, R.H. 2013. Research and development of general outcomes measures. Paper presented at the National Association of School Psychologists' Annual Convention, Seattle, Washington, August 2013.

Kruidenier, J.R. 2002. Research-based principles for adult basic education reading instruction. Washington, DC: National Institute for Literacy.

LaBerge, D. & Samuels, S.J. 1974. Toward a theory of automatic information processing in reading. Cognitive Psychology, 6:293-323.

Laufer, B. & Nation, P. 1995. Vocabulary size and use: Lexical richness in L2 written production. Applied Linguistics, 16:307-322.

Laufer, B. 1998. The development of passive and active vocabulary in a second language: Same or different? Applied Linguistics, 19(2):255-271.

Linnarud, M. 1986. Lexis in compositions: A performance analysis of Swedish learners' written English. Malmö: Liber Förlag.

Moats, L.C. 1999. Teaching reading is rocket science: What expert teachers of reading should know and be able to do. Washington, DC: Thomas Fordham Foundation.

Moats, L.C. 2000. Whole language lives on: The illusion of "balanced" reading instruction. New York: Thomas B. Fordham Foundation.

Murray, N.L. 2010. Conceptualising the English language needs of first-year university students. The International Journal of the First Year in Higher Education, 1(1):55-64.

Nagy, W. 2003. Teaching vocabulary to improve reading comprehension. Urbana, IL: National Council of Teachers of English.

National Benchmark Tests Project. 2013. Benchmark levels. Retrieved from http://www.nbt.ac.za/ (accessed 20 January 2013).

Nel, C. 2011. Linking reading literacy assessment and teaching: Rethinking pre-service teacher training programmes in the foundation phase. Journal for Language Teaching, 45(2):9-30.

Nguyen, L.T.C. & Nation, P. 2011. A bilingual vocabulary size test of English for Vietnamese learners. RELC Journal, 42(1):86-99.

NICHD (National Institute of Child Health and Human Development). 2000. Report of the National Reading Panel. Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction: Reports of the subgroups. NIH Publication No 00-4754. Washington, DC: US Government Printing Office.

Perfetti, C.A. 1985. Reading ability. New York: Oxford University Press.

Qian, D.D. & Schedl, M. 2004. Evaluation of an in-depth vocabulary knowledge measure for assessing reading performance. Language Testing, 21(1):28-52.

Qian, D.D. 2002. Investigating the relationship between vocabulary knowledge and academic reading performance: An assessment perspective. Language Learning, 52(3):513-536.

RAND Reading Study Group. 2002. Reading for understanding: Toward an R&D program in reading comprehension. Santa Monica, CA: RAND.

Rasinski, T.V. & Samuels, S.J. 2011. In S.J. Samuels & A.E. Farstrup (Eds.), What research has to say about reading instruction. Newark, DE: IRA. 94-114.

Read, J. 1998. Validating a test to measure depth of vocabulary knowledge. In A. Kunnan (Ed.), Validation in language assessment. Mahwah, NJ: Lawrence Erlbaum Associates. 41-60.

RSA DBE (Republic of South Africa. Department of Basic Education). 2013. Annual National Assessment. 2013 Diagnostic Report and 2014 Framework for Improvement. Pretoria: DBE.

Scott, I.R., Yeld, N. & Hendry, J. 2007. A case for improving teaching and learning in South African higher education. Higher Education Monitor, No 6:1-85. Pretoria: Council on Higher Education.

Simpson, M.L. & Nist, S.L. 2000. An update on strategic learning: It's more than textbook reading strategies. Journal of Adolescent and Adult Literacy, 43(6):528-541.

Snow, C.E. & Strucker, J. 2000. Lessons from preventing reading difficulties in young children for adult learning and literacy. In J. Comings, B. Garner & C. Smith (Eds.), Annual review of adult learning and literacy. San Francisco, CA: Jossey-Bass. 25-73.

Stahl, S.A. & Nagy, W. 2006. Teaching word meanings. Mahwah, NJ: Lawrence Erlbaum Associates.

Strucker, J. 1997. What silent reading tests alone can't tell you: Two case studies in adult reading differences. Retrieved from http://www.gse.harvard.edu/~ncsall/fob/1997/strucker.htm (accessed 3 February 2013).

Strucker, J. 2008. Theoretical considerations underlying the reading components. In S. Grenier, S. Jones, J. Strucker, T.S. Murray, G. Gervais & S. Brink (Eds.), Learning Literacy in Canada: Evidence from the International Survey of Reading Skills. Ottawa: Statistics Canada. 39-58.

Taylor, N. 2008. What's wrong with South African schools? Paper presented at the What's Working in School Development Conference, JET Education Services, Cape Town, November 2008.

Tilly, W.D. 2008. The evolution of school psychology to science-based practice: Problem-solving and the three-tiered model. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology. Bethesda, MD: National Association of School Psychologists. 17-36.

Van der Merwe, Z. & Nel, C. 2012. Reading literacy within a teacher preparation programme: What we know and what we should know. South African Journal of Childhood Education, 2(2):137-157.

* Email address: carisma.nel@nwu.ac.za